Mastering MLOps: A Comprehensive Guide to Questions and Answers for AI Enthusiasts

MLOps integrates DevOps principles into machine learning workflows, ensuring scalability, reproducibility, and efficiency. This blog explores MLOps concepts, tools, and challenges through detailed questions and answers. Covering key topics like pipelines, explainability, and ethical considerations, it serves as a guide for students and professionals to master MLOps and deploy robust AI systems.

ARTIFICIAL INTELLIGENCE

Dr Mahesha BR Pandit

1/10/2025 · 7 min read

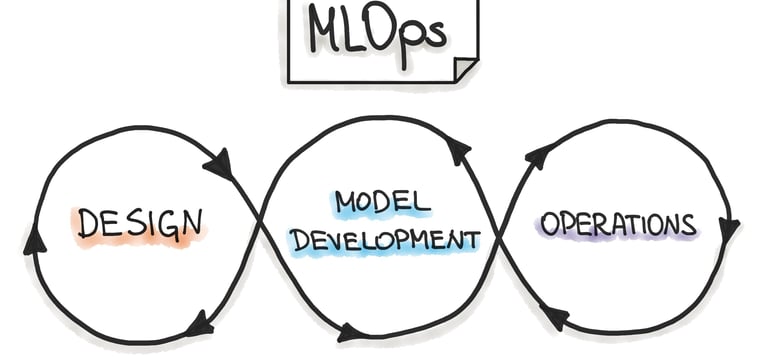

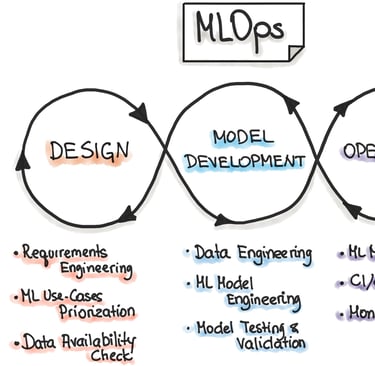

MLOps, short for Machine Learning Operations, is the practice of combining machine learning systems with DevOps principles to streamline the development, deployment, and monitoring of AI models. In the modern era of data-driven solutions, MLOps serves as a bridge between data scientists, engineers, and operations teams, ensuring scalability, reproducibility, and efficiency in machine learning workflows.

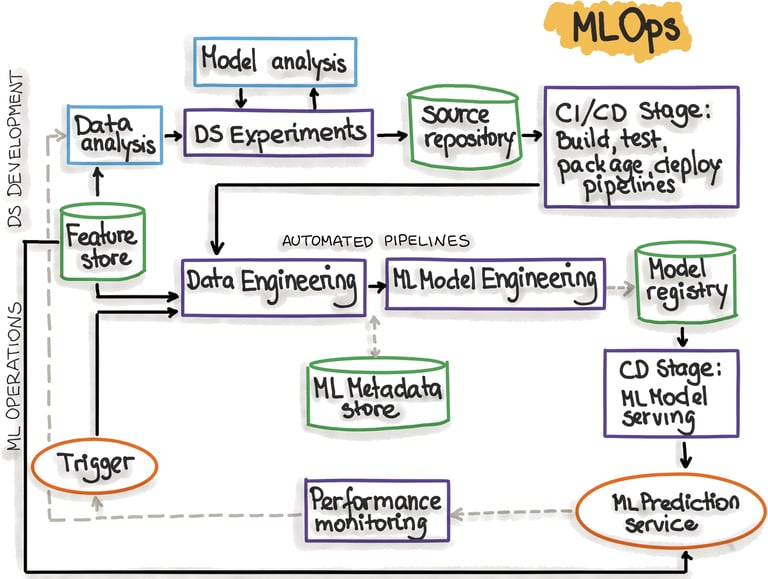

To better understand MLOps, it is helpful to compare it with its predecessor, DevOps. While DevOps focuses on improving collaboration between development and operations teams to deliver software efficiently, MLOps extends this to include the unique challenges of machine learning systems. Unlike traditional software, machine learning models depend on large datasets, require frequent retraining, and need monitoring for issues like model drift. MLOps incorporates additional components such as data engineering pipelines, feature stores, and model monitoring to address these complexities. In essence, while DevOps is about managing code and applications, MLOps also manages data, models, and their lifecycle.

Several tools are essential for building effective MLOps pipelines. MLflow, for example, supports tracking experiments, managing models, and creating model registries. Kubernetes is invaluable for orchestrating containerized applications, including ML models. Tools like TFX (TensorFlow Extended) provide end-to-end pipelines for TensorFlow models, while Feast acts as a feature store to manage and reuse features. Prometheus and Grafana enable monitoring of models and infrastructure, ensuring real-time insights. Together, these tools form a robust ecosystem for implementing MLOps workflows.

With a foundational understanding of MLOps, let us explore key concepts through short, medium, and long-answer questions. These questions and answers aim to clarify the nuances of MLOps for students and professionals alike.

Short Answer Questions

What is MLOps, and why is it important?

MLOps, or Machine Learning Operations, integrates machine learning systems with DevOps practices to automate and streamline the lifecycle of machine learning models. It is important because it ensures the reproducibility, scalability, and reliability of AI systems, enabling seamless collaboration between data scientists, engineers, and operations teams.

How does MLOps address model drift?

MLOps addresses model drift by continuously monitoring model performance and input data distributions in production, and by triggering retraining, either automatically or after review, when they shift. This helps models remain accurate and relevant over time.

What is a feature store, and how is it used in MLOps?

A feature store is a centralized repository for storing, retrieving, and reusing features used in machine learning models. It helps ensure consistency between training and production environments in MLOps workflows.
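To make the consistency point concrete, here is a minimal sketch of the idea in plain Python. It is not Feast or any real feature store; the class `InMemoryFeatureStore`, the entity id `user_42`, and the feature names are all hypothetical. The point it illustrates is that training and serving read features through the same lookup, so they cannot silently diverge.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryFeatureStore:
    """Toy feature store: one feature row per entity id (hypothetical design)."""
    _rows: dict = field(default_factory=dict)

    def write(self, entity_id: str, features: dict) -> None:
        # Merge new values into the entity's existing feature row.
        self._rows.setdefault(entity_id, {}).update(features)

    def read(self, entity_id: str, names: list) -> list:
        # Training and serving both call this method, so they receive
        # the same values in the same order.
        row = self._rows[entity_id]
        return [row[name] for name in names]


store = InMemoryFeatureStore()
store.write("user_42", {"orders_30d": 3, "avg_basket": 27.5})
store.write("user_42", {"days_since_login": 2})

FEATURES = ["orders_30d", "avg_basket", "days_since_login"]
training_vector = store.read("user_42", FEATURES)  # used to build a training set
serving_vector = store.read("user_42", FEATURES)   # used at prediction time
```

A production feature store adds persistence, point-in-time lookups, and low-latency online serving, none of which this sketch attempts.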

What is the role of CI/CD in MLOps?

CI/CD in MLOps automates the integration, testing, and deployment of machine learning models. Continuous integration ensures changes in code and data are tested, while continuous deployment enables seamless updates to production environments.
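One common piece of an ML deployment pipeline is a quality gate that decides whether a newly trained model may replace the current one. The sketch below shows the idea under assumed metrics and thresholds; the function name `should_promote`, the metric keys, and the default limits are illustrative, not taken from any specific CI/CD tool.

```python
def should_promote(candidate: dict, production: dict,
                   min_gain: float = 0.01,
                   max_latency_ms: float = 100.0) -> bool:
    """Return True if the candidate model may replace the production model."""
    # Require a meaningful accuracy improvement, not just noise.
    gain = candidate["accuracy"] - production["accuracy"]
    # Also require that the candidate stays within the latency budget.
    return gain >= min_gain and candidate["p95_latency_ms"] <= max_latency_ms


production = {"accuracy": 0.90, "p95_latency_ms": 40.0}
better = {"accuracy": 0.93, "p95_latency_ms": 55.0}    # more accurate, fast enough
slower = {"accuracy": 0.95, "p95_latency_ms": 180.0}   # more accurate, too slow
marginal = {"accuracy": 0.902, "p95_latency_ms": 40.0} # gain below the threshold

decisions = [should_promote(m, production) for m in (better, slower, marginal)]
```

In a real pipeline a check like this would run as a test step, and a failing gate would stop the deployment job.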

How does version control apply to MLOps?

Version control in MLOps applies to code, datasets, and models, ensuring traceability and reproducibility. Tools like Git and DVC help manage versions of these components, enabling consistent and collaborative workflows.
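The idea behind versioning data can be illustrated with a content hash: if the data changes, its identifier changes. The sketch below uses only the Python standard library and does not call Git or DVC; the function `dataset_fingerprint` and the sample rows are hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(rows: list) -> str:
    """Hash the dataset contents so that any change yields a new version id."""
    # sort_keys makes the hash independent of key order inside each row.
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


v1 = dataset_fingerprint([{"id": 1, "label": 0}, {"id": 2, "label": 1}])
# Same content, different key order: same fingerprint.
v1_again = dataset_fingerprint([{"label": 0, "id": 1}, {"id": 2, "label": 1}])
# One label changed: different fingerprint.
v2 = dataset_fingerprint([{"id": 1, "label": 0}, {"id": 2, "label": 0}])
```

Storing such a fingerprint next to the code commit and the trained model is one simple way to record which data produced which model.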

What are the key components of an MLOps pipeline?

The key components of an MLOps pipeline include data ingestion, preprocessing, feature engineering, model training, validation, deployment, and monitoring. Each stage is essential for automating and maintaining the lifecycle of ML models.

Why is monitoring critical in MLOps?

Monitoring is critical in MLOps to track the performance and health of deployed models. It ensures early detection of anomalies like model drift or latency issues, allowing timely corrective actions.

What is model drift?

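Model drift refers to the degradation in model performance over time, caused by changes in the input data distribution (data drift) or in the relationship between inputs and the target, for example shifting user behavior (concept drift). MLOps tackles this through continuous monitoring and automated retraining.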
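A change in data distribution can be quantified. One widely used measure is the Population Stability Index (PSI), which compares the share of values per bin in a reference sample with the share in a live sample. The sketch below is a simplified, equal-width-bin version in plain Python; the bin count, the `eps` floor, and the sample data are assumptions chosen for illustration.

```python
import math


def psi(expected: list, actual: list, bins: int = 4, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [0.1 * i for i in range(100)]  # feature values seen at training time
shifted = [v + 5.0 for v in reference]     # live values after a distribution shift

no_drift = psi(reference, list(reference))
drift_score = psi(reference, shifted)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.2 as a significant shift, but the right threshold depends on the feature and the application.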

What are some common tools used in MLOps?

Common tools include MLflow for experiment tracking, Kubeflow for orchestration, TFX for pipelines, Feast for feature stores, and Prometheus for monitoring. These tools streamline MLOps workflows.

How does MLOps enhance collaboration?

MLOps enhances collaboration by providing shared tools, version control, and standardized workflows that allow data scientists, engineers, and operations teams to work together efficiently.

Medium Answer Questions

Explain the difference between DevOps and MLOps.

DevOps focuses on improving the collaboration between development and operations teams to deliver software faster and more reliably. It involves practices like continuous integration, continuous deployment, and infrastructure as code. In contrast, MLOps extends these principles to include machine learning-specific challenges, such as managing datasets, training models, and monitoring model performance. While DevOps primarily deals with code and applications, MLOps also manages data pipelines, model registries, and continuous training workflows. The inclusion of these additional components makes MLOps essential for deploying and maintaining AI systems.

Discuss the importance of reproducibility in MLOps.

Reproducibility is a critical aspect of MLOps, ensuring that machine learning experiments can be replicated under the same conditions. It enables teams to debug issues, audit results, and maintain trust in AI systems. MLOps tools like MLflow and DVC (Data Version Control) help track experiments, version datasets, and store metadata, making reproducibility achievable even in complex workflows. Reproducibility also facilitates collaboration, as team members can work on shared experiments with confidence.
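Two habits underpin reproducibility regardless of tooling: fix every source of randomness, and record the exact parameters of each run. The sketch below shows both with the standard library only. It does not use MLflow or DVC, the "training" is a stand-in computation, and the function `run_experiment` and its record format are hypothetical.

```python
import hashlib
import json
import random


def run_experiment(params: dict) -> dict:
    """Run a stand-in 'training' job and return a record that identifies it."""
    rng = random.Random(params["seed"])  # local RNG, no hidden global state
    # Placeholder for real training: the metric depends only on the seed.
    metric = round(sum(rng.random() for _ in range(100)) / 100, 6)
    # Derive the run id from the parameters so identical runs share an id.
    encoded = json.dumps(params, sort_keys=True).encode("utf-8")
    run_id = hashlib.sha256(encoded).hexdigest()[:10]
    return {"run_id": run_id, "params": params, "metric": metric}


first = run_experiment({"seed": 7, "learning_rate": 0.1})
second = run_experiment({"seed": 7, "learning_rate": 0.1})
other = run_experiment({"seed": 8, "learning_rate": 0.1})
```

Here `first` and `second` are identical records, which is exactly the property an audit or a debugging session relies on.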

What role do monitoring tools play in MLOps?

Monitoring tools in MLOps, such as Prometheus and Grafana, play a crucial role in tracking model performance, system metrics, and data pipelines. They provide real-time insights into issues like latency, prediction errors, and model drift. Effective monitoring ensures that deployed models operate reliably and that any anomalies are detected early, preventing business disruptions.
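The kind of check a monitoring stack evaluates can be sketched in a few lines. The example below keeps a rolling window of request latencies and flags a breach when the 95th percentile exceeds a limit. It is plain Python rather than Prometheus or Grafana configuration, and the class `LatencyMonitor`, the window size, and the 200 ms limit are assumptions for illustration.

```python
import math
from collections import deque


class LatencyMonitor:
    """Rolling-window p95 latency check (hypothetical helper)."""

    def __init__(self, window: int = 100, p95_limit_ms: float = 200.0):
        self.samples = deque(maxlen=window)  # old samples fall off automatically
        self.p95_limit_ms = p95_limit_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        ordered = sorted(self.samples)
        # Nearest-rank percentile: smallest value with >= 95% of samples at or below it.
        rank = max(1, math.ceil(len(ordered) * 95 / 100))
        return ordered[rank - 1]

    def breached(self) -> bool:
        return bool(self.samples) and self.p95() > self.p95_limit_ms


monitor = LatencyMonitor()
for _ in range(100):
    monitor.record(50.0)       # normal traffic
healthy = monitor.breached()

for _ in range(10):
    monitor.record(500.0)      # a burst of slow requests
degraded = monitor.breached()
```

The same pattern applies to model-level signals such as prediction error or drift scores: keep a window, compute a statistic, compare it with a threshold.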

How does MLOps handle model lifecycle management?

MLOps handles model lifecycle management by automating stages like training, validation, deployment, monitoring, and retraining. Model registries track versions, metadata, and performance metrics, ensuring seamless transitions between stages and reproducibility.

Why is data quality important in MLOps?

Data quality is critical in MLOps because the accuracy and reliability of machine learning models depend on high-quality data. Poor-quality data leads to biased models, incorrect predictions, and diminished trust in AI systems. Tools like Great Expectations help validate and maintain data quality.
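As a rough illustration of what a data validation step does, the sketch below checks a batch for missing values and out-of-range values before it reaches training. It is plain Python and does not use the Great Expectations API; the function `validate_rows`, the column names, and the age range are hypothetical.

```python
def validate_rows(rows: list, required: list, ranges: dict) -> list:
    """Return a list of readable problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) is None:
                problems.append(f"row {i}: missing {col}")
        for col, (lo, hi) in ranges.items():
            value = row.get(col)
            # Missing values are reported above, so only range-check present ones.
            if value is not None and not lo <= value <= hi:
                problems.append(f"row {i}: {col}={value} outside [{lo}, {hi}]")
    return problems


batch = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 48000},   # missing value
    {"age": 212, "income": 61000},    # implausible value
]
issues = validate_rows(batch, required=["age", "income"], ranges={"age": (0, 120)})
```

A pipeline would typically stop, or quarantine the batch, whenever `issues` is not empty.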

What is the purpose of model registries in MLOps?

Model registries in MLOps serve as centralized repositories to store, version, and manage machine learning models. They ensure reproducibility, track performance metrics, and enable collaboration by providing a single source of truth for models.
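The core behavior of a registry, versioning models and tracking which version is live, fits in a small sketch. The class below is a toy in-memory registry with a hypothetical API; it is not MLflow's registry, and the model name, artifact paths, and stage names are made up for illustration.

```python
class ModelRegistry:
    """Toy in-memory model registry (hypothetical API)."""

    def __init__(self):
        self._versions = {}  # model name -> list of version records

    def register(self, name: str, artifact_uri: str, metrics: dict) -> int:
        records = self._versions.setdefault(name, [])
        version = len(records) + 1  # versions are numbered from 1
        records.append({"version": version, "artifact_uri": artifact_uri,
                        "metrics": metrics, "stage": "staging"})
        return version

    def promote(self, name: str, version: int) -> None:
        # Only one version may be in production: archive the current one first.
        for record in self._versions[name]:
            if record["stage"] == "production":
                record["stage"] = "archived"
        self._versions[name][version - 1]["stage"] = "production"

    def production(self, name: str) -> dict:
        return next(r for r in self._versions[name] if r["stage"] == "production")


registry = ModelRegistry()
v1 = registry.register("churn", "models/churn/1", {"auc": 0.81})
v2 = registry.register("churn", "models/churn/2", {"auc": 0.84})
registry.promote("churn", v1)
registry.promote("churn", v2)   # v1 is archived, v2 becomes production
live = registry.production("churn")
```

Because every consumer asks the registry for the production version, a rollback is just another call to `promote` with the earlier version number.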

How does MLOps ensure scalability?

MLOps ensures scalability by leveraging cloud platforms, container orchestration tools like Kubernetes, and distributed computing frameworks. These technologies enable efficient handling of large datasets, complex models, and high-demand prediction workloads.

What is continuous training in MLOps?

Continuous training in MLOps refers to the practice of automatically retraining models when new data becomes available. This ensures that models adapt to changing patterns and maintain accuracy over time.
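In practice, "when new data becomes available" is usually turned into an explicit trigger policy. The sketch below combines three common triggers: enough new data, model age, and a drop in live accuracy. The function `should_retrain` and all default thresholds are assumptions for illustration and would need tuning per use case.

```python
def should_retrain(new_rows: int, days_since_training: int, live_accuracy: float,
                   min_rows: int = 10_000, max_age_days: int = 30,
                   accuracy_floor: float = 0.85) -> bool:
    """Decide whether to start a retraining job (illustrative policy)."""
    enough_new_data = new_rows >= min_rows
    model_is_stale = days_since_training >= max_age_days
    accuracy_dropped = live_accuracy < accuracy_floor
    # Any single trigger is sufficient to schedule retraining.
    return enough_new_data or model_is_stale or accuracy_dropped


fresh_model = should_retrain(new_rows=500, days_since_training=3, live_accuracy=0.91)
lots_of_data = should_retrain(new_rows=25_000, days_since_training=3, live_accuracy=0.91)
degraded = should_retrain(new_rows=500, days_since_training=3, live_accuracy=0.80)
```

A scheduler or pipeline orchestrator would evaluate such a policy periodically and launch the training pipeline when it returns `True`.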

How does MLOps address compliance and security?

MLOps addresses compliance and security by implementing data governance policies, ensuring traceability of datasets and models, and using secure deployment practices. Libraries like TensorFlow Privacy add differentially private training, which limits how much a trained model can reveal about individual records in its training data.

What are some challenges in implementing MLOps?

Challenges in implementing MLOps include high initial setup costs, the complexity of integrating tools, managing large datasets, ensuring reproducibility, and handling cross-functional collaboration between teams with different expertise.

Long Answer Questions

Design an end-to-end MLOps pipeline for a predictive analytics use case.

An end-to-end MLOps pipeline for predictive analytics involves several interconnected stages. First, data ingestion captures raw data from multiple sources such as databases, APIs, or streaming platforms like Kafka. This data is then preprocessed to handle missing values, normalize features, and create derived variables. Feature engineering follows, storing reusable features in a feature store like Feast to maintain consistency between training and production environments.

The next step is model training, where algorithms like Gradient Boosting or Random Forest are trained using frameworks such as Scikit-learn or TensorFlow. After training, the model undergoes validation to evaluate metrics like accuracy, precision, and recall. Once validated, the model is deployed as a containerized service using Docker and orchestrated with Kubernetes. Real-time predictions are served through APIs built with Flask or FastAPI.

Monitoring ensures the reliability of the deployed model. Tools like Prometheus track system metrics, while Grafana visualizes performance trends. CI/CD pipelines automate updates, ensuring seamless integration of new data and retrained models. This pipeline provides scalability, reproducibility, and continuous improvement for predictive analytics systems.
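The stages described above can be sketched as a chain of small functions. The example below is deliberately tiny and uses only plain Python: the data is hard-coded, the "model" is a single threshold, and "deployment" is a boolean flag. All function names, the data, and the `min_accuracy` gate are hypothetical; a real pipeline would replace each stage with the tools named in the text.

```python
def ingest() -> list:
    # Stand-in for reading from a database, API, or stream.
    return [{"x": 1.0, "y": 0}, {"x": 2.0, "y": 0}, {"x": None, "y": 1},
            {"x": 8.0, "y": 1}, {"x": 9.0, "y": 1}]


def preprocess(rows: list) -> list:
    # Drop rows with a missing feature value.
    return [r for r in rows if r["x"] is not None]


def train(rows: list) -> float:
    # Toy "model": a threshold halfway between the two class means.
    class0 = [r["x"] for r in rows if r["y"] == 0]
    class1 = [r["x"] for r in rows if r["y"] == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2


def validate(threshold: float, rows: list) -> float:
    # Simplification: evaluated on the training rows; use held-out data in practice.
    correct = sum((r["x"] > threshold) == bool(r["y"]) for r in rows)
    return correct / len(rows)


def run_pipeline(min_accuracy: float = 0.9) -> dict:
    rows = preprocess(ingest())
    threshold = train(rows)
    accuracy = validate(threshold, rows)
    # Deployment gate: only ship models that meet the accuracy bar.
    return {"threshold": threshold, "accuracy": accuracy,
            "deployed": accuracy >= min_accuracy}


result = run_pipeline()
```

Keeping each stage as a separate function with explicit inputs and outputs is what lets an orchestrator rerun, cache, or swap stages independently.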

Explain the importance of explainability in MLOps.

Explainability is essential in MLOps to ensure transparency and trust in AI systems. It allows stakeholders to understand how models make predictions, addressing concerns about bias and fairness. In critical domains like healthcare and finance, explainability is vital for compliance and decision-making. Tools like SHAP and LIME provide insights into feature importance and model behavior, making AI systems more interpretable. By integrating explainability into MLOps workflows, organizations can build trust with users and regulators while improving model accountability.
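To show what "feature importance" means in code, the sketch below implements permutation importance, a simpler model-agnostic technique than SHAP or LIME and not a substitute for them. It shuffles one feature column at a time and measures how much accuracy drops. The toy model, the data, and the function names are assumptions for illustration.

```python
import random


def accuracy(predict, X, y):
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, seed=0):
    """Accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for col in range(len(X[0])):
        shuffled_col = [row[col] for row in X]
        rng.shuffle(shuffled_col)
        # Rebuild the dataset with only this column permuted.
        X_perm = [row[:col] + [v] + row[col + 1:]
                  for row, v in zip(X, shuffled_col)]
        importances.append(baseline - accuracy(predict, X_perm, y))
    return importances


def predict(row):
    # Toy model: uses feature 0 only and ignores feature 1.
    return 1 if row[0] > 0.5 else 0


X = [[i % 2, (i // 2) % 2] for i in range(200)]
y = [row[0] for row in X]  # the label equals feature 0
importances = permutation_importance(predict, X, y)
```

Shuffling feature 0 breaks the predictions, so its importance is large, while feature 1 scores exactly zero because the model never reads it.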

Discuss the challenges of implementing MLOps in resource-constrained environments.

Implementing MLOps in resource-constrained environments, such as startups or edge computing scenarios, presents several challenges. Limited budgets may rule out running heavyweight platforms like Kubeflow, which is open source but requires a Kubernetes cluster and the expertise to operate it, and edge devices often lack the computational power required for large-scale ML models. Scalability is another concern, as constrained environments struggle to handle increasing workloads. Additionally, the lack of expertise in MLOps tools and practices can hinder implementation. To address these challenges, organizations can adopt lightweight tools like MLflow, use cloud-based resources judiciously, and focus on automating critical parts of the pipeline to reduce manual overhead.

What are the ethical considerations in MLOps?

Ethical considerations in MLOps include ensuring fairness, avoiding biases in models, and maintaining user privacy. It is essential to audit data sources, implement explainability techniques, and adhere to legal regulations like GDPR. Ethical AI practices build trust and prevent harm caused by biased or inaccurate predictions.
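Fairness can be audited with simple metrics as part of a pipeline. One of the most basic is the demographic parity gap: the difference in positive prediction rates between groups. The sketch below computes it in plain Python; the predictions, the group labels, and the function names are made-up illustrations, and this single metric is not a complete fairness assessment.

```python
def positive_rate(predictions: list, groups: list, group: str) -> float:
    # Share of positive predictions within one group.
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


def demographic_parity_gap(predictions: list, groups: list) -> float:
    """Largest difference in positive prediction rate between any two groups."""
    rates = {g: positive_rate(predictions, groups, g) for g in set(groups)}
    return max(rates.values()) - min(rates.values())


predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_gap(predictions, groups)  # 0.75 for "a" vs 0.25 for "b"
```

A gap this large would normally prompt a review of the training data and features before the model is released.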

How does MLOps facilitate multi-model management?

MLOps facilitates multi-model management by using model registries and orchestration tools to handle multiple models simultaneously. It tracks model versions, performance metrics, and deployment environments, ensuring efficient updates and scalability across applications. Tools like MLflow and Kubeflow simplify managing diverse models.
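When several versions of a model are live at once, traffic has to be split between them in a controlled way. The sketch below shows one simple approach, assumed here for illustration: hash the request id into a bucket from 0 to 99 and route by percentage weights. The function `pick_model` and the model names are hypothetical.

```python
import hashlib


def pick_model(request_id: str, weights: dict) -> str:
    """Deterministically route a request to a model version by traffic weight."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    cumulative = 0
    for model, percent in weights.items():
        cumulative += percent
        if bucket < cumulative:
            return model
    raise ValueError("weights must sum to 100")


weights = {"churn:v1": 90, "churn:v2": 10}  # 10% canary traffic to v2
assignments = [pick_model(f"req-{i}", weights) for i in range(1000)]
v1_share = assignments.count("churn:v1") / len(assignments)
```

Because the routing depends only on the request id, the same request always reaches the same model version, which makes comparisons between versions easier to interpret.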

What role does automation play in MLOps?

Automation is a cornerstone of MLOps, enabling efficiency and consistency in repetitive tasks like data preprocessing, model training, and deployment. Automated CI/CD pipelines ensure quick updates, while monitoring tools detect issues in real-time. Automation reduces manual errors and accelerates the machine learning lifecycle.

How does MLOps support real-time inference?

MLOps supports real-time inference by deploying models as APIs in low-latency environments. Serving tools like TensorFlow Serving and web frameworks like FastAPI can return predictions within milliseconds, depending on model size and hardware, making MLOps suitable for applications like fraud detection and recommendation systems. Efficient monitoring ensures reliability in production.
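The core of a real-time endpoint is a handler that parses a request, scores it, and returns a response while tracking its own latency. The sketch below is framework-agnostic plain Python, not TensorFlow Serving or FastAPI code; the linear "model", its weights, and the field names are hypothetical.

```python
import json
import time

WEIGHTS = {"amount": 0.002, "is_foreign": 0.3}  # hypothetical model parameters
BIAS = 0.05


def predict_handler(body: str) -> str:
    """Parse a JSON request, score it, and return a JSON response."""
    started = time.perf_counter()
    features = json.loads(body)
    # Reject requests that lack a feature the model needs.
    missing = [name for name in WEIGHTS if name not in features]
    if missing:
        return json.dumps({"error": f"missing features: {missing}"})
    score = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    latency_ms = (time.perf_counter() - started) * 1000
    return json.dumps({"fraud_score": round(min(score, 1.0), 4),
                       "latency_ms": round(latency_ms, 3)})


response = json.loads(predict_handler('{"amount": 100, "is_foreign": 1}'))
rejected = json.loads(predict_handler('{"amount": 100}'))
```

In a web framework, a function like this would sit behind a route, and the recorded latency would be exported to the monitoring system.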

What is the future of MLOps?

The future of MLOps lies in increased automation, integration with edge computing, and advancements in AI governance. As ML models grow in complexity, MLOps will emphasize ethical practices, scalability, and transparency. Emerging tools will further streamline workflows and adapt to evolving technological landscapes.

Discuss a case study where MLOps improved an organization’s workflow.

Airbnb is a commonly cited example. The company has publicly described Bighead, its in-house end-to-end machine learning platform, which brings feature management (Zipline), model training, deployment, and online inference (Deep Thought) under shared tooling. The stated goal of this standardization was to shorten the path from prototype to production and to make models easier to scale and maintain reliably.

Conclusion

MLOps is a transformative practice that combines the best of DevOps and machine learning to deliver robust AI systems. Through questions and answers, this blog has explored foundational concepts, tools, and challenges, providing a comprehensive guide for students and professionals. Mastering MLOps is key to unlocking the full potential of AI in real-world applications.

Image Courtesy: MLOps, https://ml-ops.org/content/mlops-principles