Feature Selection versus Dimensionality Reduction: A Clearer Perspective

Dr Mahesha BR Pandit

9/8/2024

The world of machine learning often feels like navigating a dense forest of concepts, and among these, feature selection and dimensionality reduction can be particularly confusing. Both reduce the number of input variables a model has to work with, which makes data more manageable and often improves model performance. Although they serve similar purposes, they take very different paths to achieve their goals.

Understanding Feature Selection

Feature selection is the art of picking the most relevant features from your dataset. Think of it as choosing the key ingredients for a recipe: you might have a pantry full of options, but only a handful are needed to cook the dish well. Feature selection does not alter the original data. It discards irrelevant or redundant variables and keeps a subset of the original ones, unchanged, that carry the most information about the target.

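This process relies heavily on understanding the data and its relationship with the target variable. Statistical tests (for example, correlation or mutual-information scores), model-driven procedures that repeatedly fit a model and drop the weakest features (such as recursive feature elimination), regularization that pushes unhelpful coefficients to zero (such as an L1 penalty), or plain domain knowledge can all guide the selection. By focusing only on relevant features, feature selection can reduce overfitting, speed up computation, and improve interpretability. The remaining features are not just useful; they are the stars of your dataset.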
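To make the filter idea concrete, here is a minimal sketch in plain Python of one simple filter method: score each column by the absolute Pearson correlation with the target and keep the top k. The function names `pearson`, `select_k_best`, and `keep_columns` and the toy data are hypothetical, chosen only for illustration. Correlation captures only linear relationships, so this is one possible scoring rule among many, not the method the article prescribes.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def select_k_best(rows, target, k):
    """Hypothetical helper: indices of the k columns most correlated (in absolute value) with target."""
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        scores.append((abs(pearson(column, target)), j))
    # Highest score first; ties broken by the lower column index.
    scores.sort(key=lambda s: (-s[0], s[1]))
    return sorted(j for _, j in scores[:k])


def keep_columns(rows, indices):
    """Subset each row to the chosen columns; the values themselves are copied unchanged."""
    return [[row[j] for j in indices] for row in rows]


# Toy data (hypothetical): three features, one numeric target.
rows = [[1.0, 5.0, 0.3], [2.0, 3.0, 0.1], [3.0, 4.0, 0.4], [4.0, 1.0, 0.2], [5.0, 2.0, 0.5]]
target = [1.1, 2.0, 3.2, 3.9, 5.1]

selected = select_k_best(rows, target, 2)
print(selected)  # [0, 1]
print(keep_columns(rows, selected))
```

The kept columns are the original measurements, which is exactly what preserves interpretability. In practice, scikit-learn's `SelectKBest` combined with a scoring function such as `f_regression` or `mutual_info_regression` covers the same ground.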

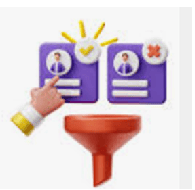

Exploring Dimensionality Reduction

Dimensionality reduction takes a different approach. Instead of selecting from the existing features, it transforms them. Imagine a complex jigsaw puzzle whose pieces are rearranged into a simpler image that still conveys most of the original picture. The process constructs new features, usually as combinations of the original ones, in fewer dimensions while retaining as much of the data's structure as possible.

Techniques like Principal Component Analysis (PCA) and t-SNE are commonly used for dimensionality reduction. PCA projects the data linearly onto the orthogonal directions of greatest variance, while t-SNE builds a nonlinear low-dimensional embedding that preserves local neighborhoods and is used mainly for visualization. Both capture the most significant patterns and structures in far fewer dimensions. While this can dramatically reduce the dataset’s complexity, the transformed features often lose their original interpretability, becoming abstract quantities derived from the original variables (for PCA, weighted sums of them).
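The PCA part of this can be illustrated with a minimal, dependency-free sketch: center the data, build the covariance matrix, approximate its leading eigenvectors by power iteration with deflation, and project onto them. It covers PCA only (t-SNE works very differently). The helper names and toy data are hypothetical, and a real project would normally rely on a linear-algebra library rather than hand-rolled power iteration.

```python
import math
import random


def covariance_matrix(rows):
    """Sample covariance matrix of the columns of rows, plus the column means."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)] for a in range(d)]
    return cov, means


def top_eigenpairs(matrix, k, iters=500, seed=0):
    """Approximate the k leading eigenpairs of a symmetric PSD matrix (power iteration + deflation)."""
    rng = random.Random(seed)
    d = len(matrix)
    m = [row[:] for row in matrix]  # copy, so deflation does not mutate the caller's matrix
    pairs = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(m[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0:
                break
            v = [x / norm for x in w]
        # Fix the arbitrary sign: make the largest-magnitude entry positive.
        pivot = max(range(d), key=lambda i: abs(v[i]))
        if v[pivot] < 0:
            v = [-x for x in v]
        eigval = sum(v[i] * sum(m[i][j] * v[j] for j in range(d)) for i in range(d))
        pairs.append((eigval, v))
        # Deflate: remove the found direction so the next pass finds the next one.
        m = [[m[i][j] - eigval * v[i] * v[j] for j in range(d)] for i in range(d)]
    return pairs


def pca_transform(rows, k):
    """Project rows onto k principal components; also return explained-variance ratios."""
    cov, means = covariance_matrix(rows)
    pairs = top_eigenpairs(cov, k)
    total_variance = sum(cov[j][j] for j in range(len(cov)))
    ratios = [val / total_variance for val, _ in pairs]
    projected = [
        [sum((row[j] - means[j]) * vec[j] for j in range(len(row))) for _, vec in pairs]
        for row in rows
    ]
    return projected, ratios, [vec for _, vec in pairs]


# Toy 2-D data (hypothetical) lying close to the line y = 2x.
rows = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.0]]
projected, ratios, components = pca_transform(rows, 1)
print(components[0])  # weights of the single new feature on the two original columns
print(ratios[0])      # share of total variance this one direction explains
```

Each output coordinate is a weighted sum of all original columns (the weights are the entries of the component vector), which is why these new features are harder to interpret than selected ones. PCA is also sensitive to feature scale, so features are usually standardized first; the sketch skips that step.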

When to Choose One Over the Other

The choice between feature selection and dimensionality reduction depends on the problem at hand. If interpretability is a priority, feature selection often wins. Keeping the original features intact allows a clearer understanding of how each one influences the outcome. This can be critical in fields like healthcare or finance, where transparency is essential.

On the other hand, when datasets are extremely high-dimensional, such as in image or text processing, dimensionality reduction often becomes a practical necessity. By summarizing the data into fewer dimensions, it reduces the computational burden while preserving the critical information needed for model training.

Striking a Balance Between Simplicity and Performance

Both feature selection and dimensionality reduction share a common goal: simplifying data without losing its meaning. However, the path chosen has implications for the model’s performance and interpretability. The key lies in understanding the dataset, the problem requirements, and the trade-offs between simplicity and depth.

In some cases, these techniques can complement each other. Feature selection can be used to remove irrelevant variables, followed by dimensionality reduction to compress the remaining data. This layered approach balances the strengths of both methods, allowing for streamlined and effective machine learning workflows.
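A minimal sketch of that layered approach, assuming a variance threshold as the selection step (one simple unsupervised filter that drops near-constant columns) followed by projection onto the first principal component. The function names, threshold, and data are hypothetical. In scikit-learn the same chain is commonly expressed as a pipeline of `VarianceThreshold` and `PCA`.

```python
import math
import random


def variance_filter(rows, threshold):
    """Selection step (hypothetical helper): indices of columns whose sample variance exceeds threshold."""
    n = len(rows)
    keep = []
    for j in range(len(rows[0])):
        col = [row[j] for row in rows]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / (n - 1)
        if var > threshold:
            keep.append(j)
    return keep


def project_onto_first_pc(rows, iters=500, seed=0):
    """Compression step: scores of the centered rows on the leading covariance eigenvector."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)] for a in range(d)]
    v = [random.Random(seed).random() + 0.1 for _ in range(d)]
    for _ in range(iters):  # power iteration
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return [sum(r[j] * v[j] for j in range(d)) for r in centered]


def select_then_compress(rows, threshold):
    """Drop low-variance columns first, then compress what remains to one dimension."""
    keep = variance_filter(rows, threshold)
    reduced = [[row[j] for j in keep] for row in rows]
    return keep, project_onto_first_pc(reduced)


# Hypothetical data: the third column is constant and carries no information.
rows = [[1.0, 2.1, 7.0], [2.0, 3.9, 7.0], [3.0, 6.2, 7.0], [4.0, 7.8, 7.0], [5.0, 10.0, 7.0]]
kept, scores = select_then_compress(rows, threshold=1e-9)
print(kept)  # [0, 1]
print(scores)
```

Ordering matters here: removing uninformative columns first keeps them from adding noise to the covariance estimate and shrinks the matrix the compression step has to handle.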

Conclusion: Two Paths to Clarity

Feature selection and dimensionality reduction are not opposing concepts but rather tools that cater to different needs in data processing. By recognizing their unique strengths and limitations, you can make informed decisions that align with your project’s goals. Simplifying data is not just about reducing the number of features; it is about retaining what matters most while building a model that performs well on the problem you are solving.