Dynamic Scaling of AI Workloads in Serverless Architectures

Discover how serverless architectures are reshaping the way AI workloads are managed. Learn about the challenges of scaling AI, the power of dynamic resource allocation, and how serverless platforms enable seamless scalability and efficiency. Dive into this blog to explore the future of AI infrastructure and its transformative potential.

ARTIFICIAL INTELLIGENCE, SOFTWARE ARCHITECTURE

Dr Mahesha BR Pandit

1/13/2025, 3 min read

Artificial Intelligence has emerged as an essential tool across industries, driving innovation and efficiency. As AI applications grow in complexity, the need for infrastructure that can adapt to unpredictable demands becomes critical. Serverless architectures have become a key solution, enabling dynamic scaling for AI workloads without the burden of managing servers manually. This approach shifts the focus back to development and deployment, where it truly belongs.

Understanding Serverless Computing

The term serverless does not mean there are no servers involved. Instead, it refers to a cloud-based approach where infrastructure management is handled by a provider. Developers can focus on their applications while the provider takes care of provisioning, scaling, and maintaining the servers. Users are charged based on the resources they consume, which makes serverless computing an efficient choice for workloads that experience variable demand.

AI workloads, which are often compute-intensive, stand to gain significantly from this model. Tasks such as running inference on large datasets or training lightweight machine learning models require flexible and responsive infrastructure. Serverless platforms provide this flexibility by scaling resources up or down as demand changes, which avoids paying for over-provisioned capacity.

The Demands of AI Workloads

Artificial Intelligence workloads come with their own set of challenges. Whether it is training complex neural networks or analyzing real-time data, these tasks require substantial computational resources. The demands are not constant; for instance, a chatbot may see a surge in activity during certain hours while remaining idle at other times. Traditional setups struggle to cope with such fluctuations, often leading to either underutilized or overwhelmed systems.

Dynamic scaling addresses this issue effectively. Serverless architectures allocate resources on demand, so capacity follows the workload instead of being fixed in advance: more function instances run during a surge, and none are billed while the application is idle. This reduces the inefficiencies of fixed resource allocation and makes costs track actual usage.
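To make the idea of on-demand allocation concrete, the sketch below estimates how many function instances would need to run at once for a given traffic level, using Little's law (concurrency equals arrival rate times average duration). The traffic figures and the 0.5 second inference time are illustrative assumptions, not measurements from any real chatbot.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate concurrent instances with Little's law: L = arrival rate * duration."""
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    return math.ceil(requests_per_second * avg_duration_seconds)


# Hypothetical chatbot traffic profile (requests per second by period).
traffic = {"overnight": 0.5, "daytime": 20.0, "evening_peak": 150.0}

# Assumed average time to serve one request, in seconds.
AVG_INFERENCE_SECONDS = 0.5

plan = {
    period: required_concurrency(rps, AVG_INFERENCE_SECONDS)
    for period, rps in traffic.items()
}

for period, instances in plan.items():
    print(f"{period}: about {instances} concurrent instance(s)")
```

With a fixed deployment, capacity would have to be sized for the evening peak all day. A platform that scales on demand only needs to run the smaller number of instances during quieter periods.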

How Serverless Supports AI Scalability

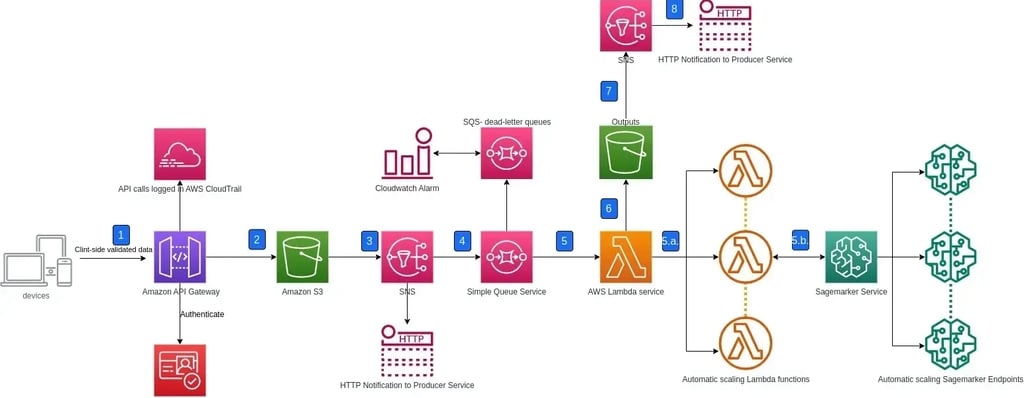

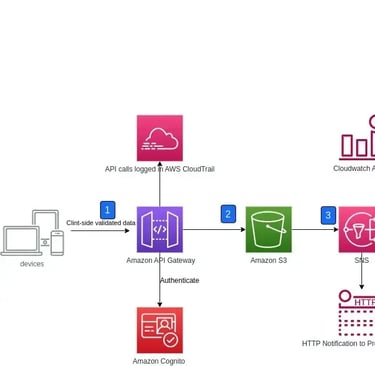

Platforms like AWS Lambda, Google Cloud Functions, and Azure Functions have been designed with scalability in mind. They allow AI applications to handle tasks such as preprocessing data, running inference, or even training lightweight models without manual intervention. For example, when an AI-driven application experiences a sudden increase in user activity, the serverless platform automatically provisions additional resources to maintain performance levels.
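As a rough illustration of inference in a function, here is a minimal sketch written in the `(event, context)` handler shape used by AWS Lambda's Python runtime. The tiny linear model, its weights, and the `features` request field are hypothetical stand-ins; a real function would load a trained model instead. Keeping the model at module scope means it is set up once per instance and reused by later invocations.

```python
import json

# Hypothetical stand-in for a trained model: weights of a tiny linear scorer.
# A real deployment would load serialized weights from the package or storage.
_WEIGHTS = [0.8, -0.3, 0.5]
_BIAS = 0.1


def predict(features):
    """Return a linear score for one feature vector."""
    if len(features) != len(_WEIGHTS):
        raise ValueError(f"expected {len(_WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(_WEIGHTS, features)) + _BIAS


def handler(event, context):
    """Parse a JSON request body, run inference, and return an HTTP-style response."""
    try:
        body = json.loads(event.get("body") or "{}")
        score = predict(body["features"])
    except (KeyError, ValueError, TypeError) as exc:
        # Malformed JSON, a missing field, or a wrong-sized vector ends up here.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 200, "body": json.dumps({"score": score})}
```

Because the handler keeps no per-request state, the platform can run as many copies in parallel as traffic requires.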

The integration of serverless functions with other cloud services further simplifies the development process. Functions can be triggered by events from data storage, messaging, and analytics services, so a new file or a queued message can start the next step of a workflow without custom glue code. This interconnected ecosystem lets AI workloads run from ingestion to prediction with little operational overhead.
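One common form of this integration is a function triggered by a storage event. The sketch below assumes the payload follows the Amazon S3 event notification layout (`Records`, then `s3.bucket.name` and `s3.object.key`), and it only extracts the object locations. The preprocessing step itself is left as a placeholder, and the bucket and file names in the usage note are made up.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3-style event notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # skip records that did not come from object storage
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in the notification, so decode them.
        key = unquote_plus(s3["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """List the uploaded objects that a preprocessing step would pick up."""
    work = extract_s3_objects(event)
    # Placeholder: a real function would download and preprocess each object here.
    return {"queued": [f"s3://{bucket}/{key}" for bucket, key in work]}
```

Calling `handler` with a record for bucket `training-data` and key `raw/my+file%281%29.csv` returns the decoded location `s3://training-data/raw/my file(1).csv`.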

Challenges in Adopting Serverless for AI

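While the benefits are clear, adopting serverless architectures for AI workloads is not without challenges. One common issue is cold start latency: the extra delay when a new function instance must be initialized, for example after a period of inactivity. For AI functions this initialization often includes loading a model, so the delay can be noticeable in applications that require real-time responses. Techniques like keeping functions warm, whether through scheduled invocations or a platform feature that keeps instances initialized (such as provisioned concurrency on AWS Lambda), or using dedicated services for latency-sensitive tasks can help mitigate these effects.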
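The keep-warm idea can be sketched as follows. The example assumes a scheduler periodically invokes the function with a marker event, here a hypothetical `{"warmup": true}` payload, and it simulates a slow model load with a 0.2 second sleep. The word-counting "model" is only a placeholder.

```python
import time

_model = None  # cached at module scope, so it survives while the instance stays warm


def _load_model():
    """Simulate an expensive one-time initialization (hypothetical 0.2 s load)."""
    time.sleep(0.2)
    return lambda text: len(text.split())  # placeholder model: counts words


def get_model():
    """Load the model on first use and reuse it on later invocations."""
    global _model
    if _model is None:
        _model = _load_model()
    return _model


def handler(event, context):
    model = get_model()  # slow only on the first call in a fresh instance
    if event.get("warmup"):
        # Scheduled ping: the instance is now initialized, no real work needed.
        return {"warmed": True}
    return {"tokens": model(event["text"])}
```

In this sketch the first invocation pays the load time, while later invocations on the same instance reuse the cached model. A scheduled ping only keeps already-running instances warm; a sudden burst that needs additional instances will still incur cold starts on the new ones.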

Another consideration is cost management. Serverless computing charges based on usage, typically per request and per unit of compute time, so workloads with sustained high request rates or long-running, memory-heavy tasks can end up costing more than an always-on server would. Careful planning and workload optimization are essential to ensure a balance between performance and cost.
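A quick break-even calculation helps with this planning. The sketch below compares a pay-per-use bill with a fixed monthly server cost. Every price and workload figure in it is a hypothetical placeholder chosen for illustration, not a quote from any provider, so substitute current numbers from your provider's pricing page.

```python
def cost_per_request(avg_seconds, memory_gb, price_per_gb_second, price_per_million_requests):
    """Compute charge plus per-request fee for a single invocation."""
    return avg_seconds * memory_gb * price_per_gb_second + price_per_million_requests / 1_000_000


def serverless_monthly_cost(requests, avg_seconds, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    """Total pay-per-use cost for a month with the given request count."""
    return requests * cost_per_request(
        avg_seconds, memory_gb, price_per_gb_second, price_per_million_requests
    )


def break_even_requests(fixed_monthly_cost, avg_seconds, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Monthly request count at which pay-per-use equals the fixed server cost."""
    return fixed_monthly_cost / cost_per_request(
        avg_seconds, memory_gb, price_per_gb_second, price_per_million_requests
    )


# All values below are hypothetical assumptions for illustration only.
PRICING = dict(
    avg_seconds=0.5,                  # assumed average inference time
    memory_gb=2.0,                    # assumed memory configured per function
    price_per_gb_second=0.00002,      # placeholder compute price
    price_per_million_requests=0.20,  # placeholder request price
)
FIXED_SERVER_COST = 150.0             # placeholder monthly cost of an always-on server

threshold = break_even_requests(FIXED_SERVER_COST, **PRICING)
print(f"Break-even at roughly {threshold:,.0f} requests per month")
```

Below the break-even volume the pay-per-use model is cheaper under these assumptions. Above it, a dedicated or reserved deployment may be worth evaluating.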

The Future of AI Workloads in Serverless Architectures

The combination of serverless architectures and AI is shaping the future of application development. As cloud providers continue to enhance their offerings with faster hardware, optimized runtimes, and reduced latency, the ability to scale AI workloads dynamically will improve even further. This alignment of technology with evolving needs offers a promising path forward for developers and organizations alike.

Dynamic scaling is no longer a luxury but a necessity for AI-driven applications. Serverless computing provides the tools to meet this need, enabling businesses to innovate without worrying about infrastructure constraints. The potential of serverless platforms to revolutionize AI workloads is immense, and it is only the beginning of what is possible in this space.

Image Courtesy: https://community.aws/content/2bK1nGto91hTwNN7HxbWudui5D1/architecting-scalable-and-secure-ai-systems-an-aws-serverless-inference-workflow